Augmenting Visual SLAM with Wi-Fi Sensing for Indoor Applications

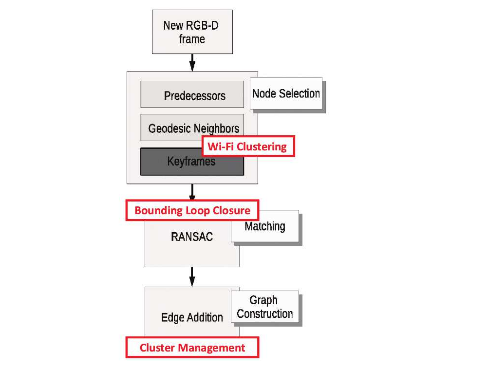

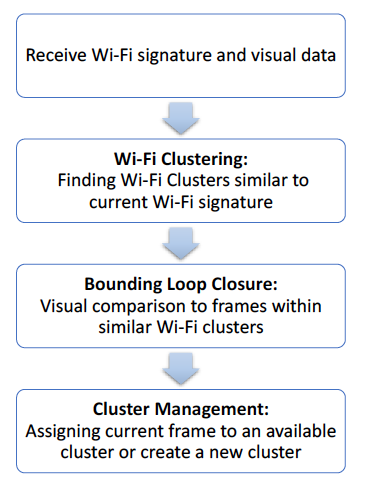

Recent trends have accelerated the development of spatial applications on mobile devices and robots, including navigation, augmented reality, and human-robot interaction. A key enabling technology for such applications is knowledge of the device's location and a map of the surrounding environment. This generic problem, referred to as Simultaneous Localization and Mapping (SLAM), is an extensively researched topic in robotics. However, visual SLAM algorithms face several challenges, including perceptual aliasing and high computational cost. These challenges affect the accuracy, efficiency, and viability of visual SLAM algorithms, especially for long-term SLAM and for use on resource-constrained mobile devices. A parallel trend is the ubiquity of Wi-Fi routers for quick Internet access in most urban environments, and most robots and mobile devices are equipped with a Wi-Fi radio as well. We propose a method that uses Wi-Fi received signal strength to alleviate the challenges faced by visual SLAM algorithms. To demonstrate the utility of this idea, this work makes the following contributions: (i) we propose a generic way to integrate Wi-Fi sensing into visual SLAM algorithms; (ii) we integrate such sensing into three well-known SLAM algorithms; (iii) using four distinct datasets, we demonstrate the performance of such augmentation in comparison to the original visual algorithms; and (iv) we compare our work to the Wi-Fi-augmented FABMAP algorithm. Overall, we show that our approach can improve the accuracy of visual SLAM algorithms by 11% on average and reduce computation time by 15% to 25% on average.
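As a rough illustration of how Wi-Fi received signal strength might reduce both perceptual aliasing and matching cost, the sketch below filters loop-closure candidates by Wi-Fi similarity before any visual comparison is attempted. It is not the paper's implementation: the function names, the use of cosine similarity, the 0.8 threshold, and the -100 dBm floor for unheard access points are all assumptions made for the example.

```python
import math


def rssi_similarity(scan_a, scan_b, floor_dbm=-100.0):
    """Cosine similarity between two Wi-Fi scans (dict: access point id -> RSSI in dBm).

    Access points missing from a scan are treated as heard at floor_dbm
    (an assumed noise floor), and readings are shifted so they are non-negative.
    """
    keys = set(scan_a) | set(scan_b)
    if not keys:
        return 0.0
    a = [max(scan_a.get(k, floor_dbm) - floor_dbm, 0.0) for k in keys]
    b = [max(scan_b.get(k, floor_dbm) - floor_dbm, 0.0) for k in keys]
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def prune_candidates(query_scan, keyframes, threshold=0.8):
    """Return ids of keyframes whose stored Wi-Fi scan resembles the query scan.

    Only these keyframes would then be passed to the (more expensive)
    visual place-recognition step.
    """
    return [
        kf_id
        for kf_id, scan in keyframes.items()
        if rssi_similarity(query_scan, scan) >= threshold
    ]
```

Under these assumptions, two visually similar corridors that hear different access points would receive a low Wi-Fi similarity and never be compared visually, which is the intuition behind using Wi-Fi to cut down both false matches and computation.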


Publications


Augmenting Visual SLAM with Wi-Fi Sensing for Indoor Applications


Zakieh Hashemifar, Charuvahan Adhivarahan, Anand Balakrishnan, Karthik Dantu

Autonomous Robots, 2019, pp. 2245–2260
